Data Deluge: Managing Demand-Supply Pressures

Demands within data and analytics are variable and characterized by frequent changes. Constantly questioning and challenging data keeps the analytics process active and adaptable. Hence, businesses are pouring trillions into enhancing their data-driven approaches. However, as per a McKinsey survey, only 8% manage to expand their analytics strategies effectively to extract value from their data.

This means companies require improved data capabilities at a faster rate. The rapid growth of data sources, volumes, and variety—known as big data drives supply-and-demand challenges in data while increasing the pressure on data leaders and practitioners to deliver.

More specifically, rapidly escalating data supply and demand strains data engineers creating data pipelines, analysts needing analytics-ready data, and business managers reliant on data for their roles. Data pipelines address these concerns and are very prevalent in the data analytics landscape.

The Question Is – Are Employing Data Pipelines Enough?

Organized data pipelines form the basis for various projects, including exploratory data analysis, data visualizations, and machine learning tasks. The global data pipeline tools market is anticipated to reach USD 48.3 billion by 2030, registering a CAGR of 24.5% from 2023 to 2030.

Businesses employ data pipelines to convert raw data into valuable insights, facilitating efficient data management. A data pipeline involves data ingestion, storage, validation, and analysis. It tracks data flow across its lifecycle and undergoes preparation and processing stages to transform it into models, reports, and dashboards. In short, the pipeline captures the operational side of data analysis.

Have you observed any major airport during peak travel times for ten minutes and wondered how chaotic it seems? However, behind the scenes, airlines and airport operators effectively use real-time data about flight arrivals and departures. Data pipelines facilitate such coordination that helps manage various aspects, from gate availability to luggage handling.

However, the real hero is the resiliency of the data pipelines that provide critical real-time data insights for on-demand decision-making. Yet, given today’s chaotic data ecosystems, achieving this is more challenging than ever.

The Complexity of Juggling Multiple Data Pipelines

One seemingly straightforward data pipeline process may often involve numerous steps, ranging from dozens to potentially hundreds. Imagine the complexity of managing multiple pipelines simultaneously owing to complex demands by analysts, scientists, and data-centric applications going through numerous steps!

Primary reasons why managing multiple pipelines creates complexities are:

- As data volume grows, grasping its content and tracking transformations in the pipeline becomes more challenging and eventually tricky to comprehend.

- Data scattered across various systems and platforms complicates data transfer through pipelines. Managing larger volumes and diverse data becomes more crucial for organizations, leading to increased reliance on it. This leads to challenges in accessing, controlling, and identifying the appropriate data.

As software delivery processes have surged and data volumes have increased, the pace of business has intensified. Correspondingly, methodologies like DevOps have arisen to tackle these challenges by merging software development and operations, promoting collaboration, automating tasks, and speeding up delivery.

If DevOps Streamlines Software Delivery, Why Not Streamline Data Delivery?

DevOps has evolved substantially since its inception, profoundly shaping the software development and delivery processes within organizations; DevOps has empowered developers.

But what about data teams? DevOps united developers, QA, and operations personnel to address software delivery challenges collaboratively. What about data delivery challenges?

As time passed, the industry increasingly acknowledged the disparities among data teams (including engineers, scientists, and analysts) and the operational difficulties they confronted. Data teams face distinct challenges compared to developers when interacting with operations teams. Developers focus primarily on code quality, while data teams handle data integration , quality, and governance .

Consider this example: Various businesses in the travel sector – airlines, hotels, car rentals, and online agencies – rely on data pipelines for seamless data transfer, processing, and analysis. Additional options for reserving accommodations or rental cars often appear when booking a flight through an airline’s website. Similarly, using a credit card for travel services enables booking flights, reserving rooms, and renting cars, among other options. Efficient operations in this industry require closely integrated systems among all participants.

Operational difficulties can be expected in the travel industry if the data pipeline isn’t well-automated or disparities exist among data teams.

For instance, if engineers and analysts use different tools or methodologies to process data, it can create friction in sharing, understanding, or utilizing the data effectively for improving operational processes or customer services. Also, the possibility that engineers structure data differently from analysts can lead to misunderstandings and errors in data pipeline processing.

So, even with active data pipelines, businesses in the sector desperately needed data operations management to produce contextual insights. They had to rethink how they handle data, from processing and storing it to its end utilization. The journey from raw data to actionable insights poses challenges for data teams as data complexity grows. This implies that a well-integrated and collaborative approach among data professionals (similar to DevOps) is essential for a seamless data pipeline and efficient operations in the travel sector.

Data Pipeline Challenges that Demand Automation

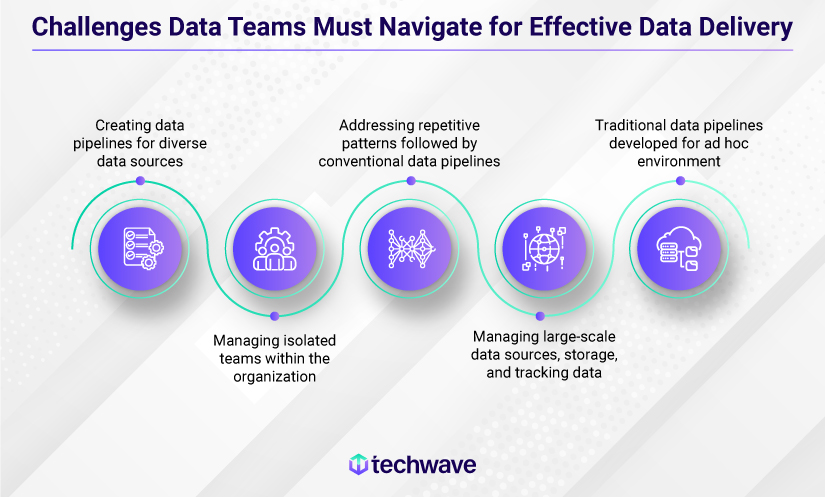

Following are a few data pipeline challenges companies usually face and must overcome with the help of automation.

- Creating data pipelines that operate efficiently in distinctive data settings, involving a blend of cloud resources and diverse data sources, is a whole new ballgame.

Companies usually hold customer data processed differently based on specific needs and purposes. Analysts use PowerBI or Tableau pipelines to create brand personas from customer demographics and survey data. Financial analysts utilize spreadsheets to compute departmental or company-wide costs, revenues, and profits. Simultaneously, the ML team integrates data with GIS, census, and socioeconomic datasets to forecast churn and enhances it for analysts’ marketing analysis.

Suppose a company moves its customer service software from Salesforce to SAP. This change might necessitate the IT team to modify how customer data is stored and accessed. This change also requires staff to learn new processes. If the transition is chaotic, it may cause delays in responding to customer queries, affecting satisfaction. Poor coordination between IT and customer service can disrupt services, impacting customer experience. - Many organizations have isolated teams managing specific aspects of data operations. Bridging these gaps requires a shift in mindset towards fostering collaboration among data engineers and scientists, DevOps teams, and other stakeholders.

For example, with the rise of Data Lakes came Data Labs, introducing Data Scientists and Data Engineers. These changes granted teams more freedom, yet deployment remains a key challenge as analytics ecosystems differ from software development frameworks in IT delivery criteria. While converting an idea into a proof of concept (POC) is possible, deploying it poses a persistent problem. - The conventional development of data pipelines follows a repetitive pattern. Developers, data science engineers, and analysts craft individual pipelines to access similar data sources frequently. As a result, multiple yet almost identical data pipelines exist, each managed within its specific project or task.

Data pipelines commonly struggle with collaboration and project synchronization. The traditional approach to building data pipelines prioritizes the project’s outcome. This involves designing ETL/ELT and analytical algorithms and assembling necessary tools for execution. However, much work is conducted independently, resulting in repetitive tasks. Consequently, users end up with various versions of the project after completion. - Managing large-scale data sources, storage, and tracking data transformation pose significant challenges in data analytics. As data volume increases, understanding its content and activities within the data pipeline becomes increasingly complex, making documentation and tracking laborious, especially when meeting various stakeholders’ needs. Traditionally, data pipelines address this issue through an unstructured manual approach.

- Traditional data pipelines are often developed ad hoc to serve specific purposes. While this approach allows for quicker starts, these pipelines frequently break down due to unexpected data or source changes. Additionally, detecting errors requires significant effort, causing downtime and impacting the company’s reputation and customer base. This underscores the need for version-controlled data products integrated with automatic continuous integration and delivery (CI/CD), including testing as an integral part of the build process.

Data Pipeline Automation – Need of The Hour

The key lies in evolving your data and analytic culture to ensure swift and reliable data delivery, meeting the knowledge needs of business managers consistently with accurate data and models. It’s crucial to recognize that the demand for data & analytics will continue to grow, and without data pipeline automation, scaling development, deployment, and operation won’t suffice to meet this demand.

Automating data pipelines is critical to surmount these challenges. To achieve this, we must progress beyond the DevOps methodology and embrace the DataOps methodology, a topic we’ll explore in our upcoming blog .