AI automation relies heavily on machine intelligence. This reliance is shaped by two crucial elements: accuracy and trust. Upholding both is vital for AI automation’s success. For background, see our previous blog, “Data Governance in the AI Era: Ensuring Trustworthy and Ethical AI Solutions.”

Generative AI – Taking Automation to the Next Level

Gartner predicts that by 2025, generative AI will account for 10% of all data produced, a significant increase from its share of less than 1% in 2021. The emergence of genAI models has sparked global enthusiasm. These models have revolutionized the creation of text, images, code, simulations, and other media formats, empowering businesses to produce personalized, high-quality content at scale.

McKinsey’s Global Survey 2023 also reports that 40% of survey participants intend to boost their overall AI investments because of advancements in genAI, aiming to harness the full potential of their data resources.

Despite these investments, the difficulty of extracting substantial value from data and AI initiatives remains unsolved. Why? Trust issues.

As businesses delve deeper, the initial excitement is replaced by the practical challenges of leveraging genAI for tangible business advantages. Specifically, the focus now shifts towards mitigating risks associated with generative AI and establishing effective governance measures for trustworthy solutions—an imperative call to action.

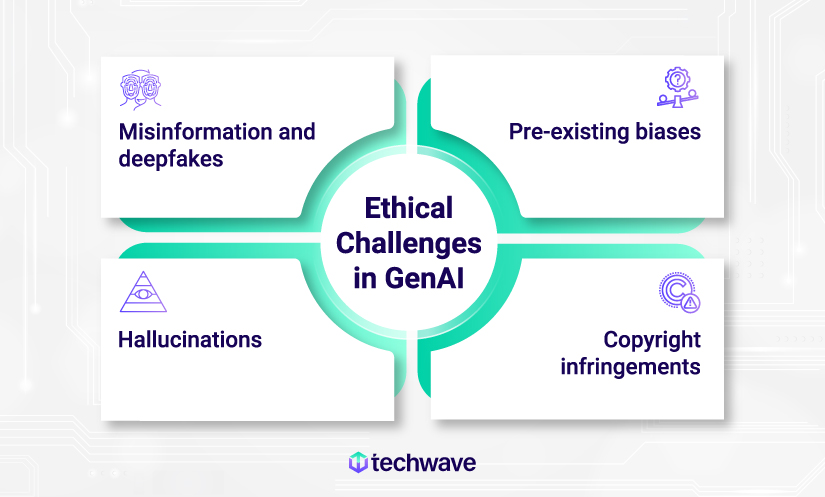

The Ethical Dilemma of Generative AI

Let’s try to understand the ethical dilemma of genAI through research findings on one of its many use cases – image manipulation:

- A study by the University of Warwick found that individuals failed to detect approximately 35% of manipulated images depicting real-world scenes.

- Another study indicates that individuals possess only a 50% probability of accurately discerning whether AI-generated content is real or fake.

- More recently, the proportion of deepfakes (discussed later in the article) in North America rose sharply from 2022 to Q1 2023: from 0.2% to 2.6% in the U.S. and from 0.1% to 4.6% in Canada.

AI-synthesized text, audio, images, and videos are being used for the unauthorized sharing of personal content, financial scams, and the spread of false information. More than 2 million AI-generated images are currently produced every day, which means the potential for harm is also escalating rapidly. This has raised serious ethical concerns about AI among business leaders, governments, and the general public.

Furthermore, genAI has disseminated inaccurate financial data, hallucinated fake court cases, created biased images, and triggered many copyright-related issues.

However, companies are eagerly adopting it to enhance productivity and enrich customer experiences in diverse ways. Within this wave of AI innovation lies a fresh concern for enterprises: How can they ensure the security of trusted data?

Let’s dive into the ethical dilemmas of generative AI solutions by exploring the fine line between innovation and ethical consideration.

Misinformation and deepfakes

Before the rise of generative AI, the main risk was widespread misinformation, particularly on social media. Content manipulation through tools like Photoshop could be easily detected using provenance or digital forensics. However, genAI elevates the threat of misinformation by creating convincing fake text, images, and audio at a low cost. It has led to significant concern about the proliferation of ‘deepfakes’ – AI-generated synthetic media replacing individuals in existing images or videos with someone else’s likeness.

Generative AI can create false news articles, fake social media profiles, and entirely artificial online identities, contributing to misinformation dissemination, disrupting social harmony, and eroding trust in public conversations. The ability to generate personalized content based on individual data also introduces new opportunities for manipulation, such as AI voice-cloning scams, and poses ongoing challenges for fake detection. For instance, deepfakes have been used to make it seem like politicians said or did things they never did.

We need genAI tools to detect AI-generated false content, strong laws to prevent misuse, and public awareness to spot AI-generated misinformation.

For instance, Intel has introduced a real-time deepfake detector named FakeCatcher, capable of identifying deepfakes while they’re viewed, potentially preventing their spread. Meanwhile, Microsoft has developed technologies to authenticate digital content, ensuring its accuracy and reliability for online consumption.

Hallucinations

We have all seen shapes and faces in clouds and on the moon; these are individual interpretations (let’s be honest – misinterpretations!). AI hallucinations are somewhat similar. In AI, these misinterpretations result from overfitting, biased or inaccurate training data, incorrect decoding, a lack of identifiable patterns, and complex model structures.

For instance, a single hallucination by Google Bard wiped about $100 billion off the market value of Google’s parent company, Alphabet. Bard falsely asserted that the James Webb Space Telescope captured the first images of exoplanets, whereas the European Southern Observatory’s telescope had actually taken those pictures in 2004!

Clearly, such hallucinations pose significant AI ethical issues, especially when users depend on AI for precise information or when businesses employ AI to engage with customers.

The key distinctions between hallucinations and deepfakes lie in their nature, intent, data sources, and verifiability. Hallucinations are unintentional imaginative outputs generated by AI models, while deepfakes involve deliberately manipulating existing content to deceive.

Exacerbation of pre-existing bias

Content generators rely on Large Language Models (LLMs) trained on vast amounts of existing information and data from various sources on the internet.

Generative AI models operate based on their training data. But what if these models are fed data that reflects deeply rooted societal biases?

GenAI will magnify these biases by producing biased outputs. These biases typically reflect existing societal prejudices and might include racist, sexist, or ableist content within online communities.

Algorithms and models can pick up unfair ideas that have been around in society for a long time. They do this by learning from large volumes of messy information, such as extensive collections of text. Sometimes, it’s hard to know where these unfair ideas originated. Facial recognition algorithms, for example, may exhibit biases, leading to misidentifications and higher error rates for particular racial or ethnic groups.

For instance, a generative AI system trained predominantly on male-authored text might disproportionately represent a male perspective. Similarly, using historically biased hiring data might result in favoritism toward specific demographics, perpetuating historical injustices.

Another instance of bias is when Amazon, in 2018, had to discard an AI recruiting tool because it exhibited bias against women. Trained on resumes submitted over ten years, the tool favored male candidates as most resumes in its dataset were from men.

These examples underscore the ethical imperative of ensuring fairness in AI systems. Careful curation and balancing of training data and continuous bias monitoring in outputs are crucial.
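To make "continuous bias monitoring in outputs" a little more concrete, here is a minimal sketch of one possible check: comparing how often a model recommends candidates from different groups. The function names, the sample data, and the 0.8 alert threshold are all assumptions made for illustration (the threshold mirrors the commonly cited four-fifths rule of thumb); this is not the method used by any company mentioned above.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, did the model recommend them?)
sample = [
    ("men", True), ("men", True), ("men", True), ("men", False),
    ("women", True), ("women", False), ("women", False), ("women", False),
]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed alert threshold, tune to your own policy

print(rates, round(ratio, 2), flagged)
```

A check like this only surfaces a gap in outcomes. Deciding whether the gap is unfair, and what to do about it, still needs human review of the data and the use case.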

Copyright infringements

When generative AI tools create images, code, or videos, they might reproduce or draw on sources in their training data, potentially violating intellectual property or copyrights.

Consequently, an ethical concern surrounding genAI revolves around uncertainties regarding the authorship and copyright of AI-generated content, determining ownership rights, and permissible generative AI use. For instance, if a generative AI tool produces music akin to a copyrighted song in the music industry, it could lead to expensive lawsuits and public criticism.

This concern revolves around three primary questions:

- Eligibility for copyright protection

- Use of copyrighted material as training data

- Ownership rights over the created content

Legal disputes have emerged, like Andersen v. Stability AI et al., filed in January 2023, where artists sued AI platforms for using their work without permission.

LLMs can also divulge sensitive or personal data contained in their training data. The exposure grows as around 15% of employees are jeopardizing business data by frequently inputting company information into genAI tools. To address this, an Amazon lawyer has advised employees against sharing code with generative AI chatbots.
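One way a company might reduce this kind of leakage is to scrub obvious sensitive strings from a prompt before it leaves the organization. The sketch below is only an illustration: the two patterns (an email address and a made-up `key_`/`sk_` style API token) and the `redact` helper are assumptions, and a real deployment would need patterns tuned to its own data plus policy and training around them.

```python
import re

# Hypothetical patterns for illustration only; real rules depend on your data.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "API_KEY": re.compile(r"\b(?:sk|key)_[A-Za-z0-9]{16,}\b"),
}


def redact(prompt):
    """Replace matches of each pattern with a placeholder and count the hits."""
    hits = {}
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            hits[label] = count
    return prompt, hits


clean, hits = redact(
    "Ask jane.doe@example.com why key_ABCDEF1234567890abcd fails."
)
print(clean)
print(hits)
```

Pattern matching like this catches only what it has been told to look for, so it complements, rather than replaces, clear usage policies for genAI tools.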

Measures Taken by Large Economies and Corporations

Despite definitive and coordinated actions by governments worldwide, such as the U.S. and the EU, to mitigate risks and pledge responsible AI use, recent data indicates that businesses have not similarly adopted clear policies on the use of generative AI.

Several jurisdictions, including the EU and China, have taken proactive steps to enact laws addressing different facets of the development and utilization of generative AI. For instance, China’s regulatory framework for generative AI covers the research, development, and utilization of genAI products intended for public service provision. Its measures include practices like:

- Watermarking the final product

- Conducting audits on algorithms

- Obtaining users’ consent before utilizing their data to train generative AI models

Though Microsoft, Google, and the EU have introduced best practices for responsible and trustworthy AI development, they emphasize that the rapid, largely unchecked expansion and impact of new genAI technologies require additional guidelines.

Envisioning a Future with Trustworthy Generative AI: Shaping an AI Technology that Benefits All Humanity

According to Gartner, by 2026, organizations whose AI models focus on transparency, trust, and security will see a 50% improvement in adoption, business goals, and user acceptance, implying a heightened need to focus on trustworthy generative AI systems.

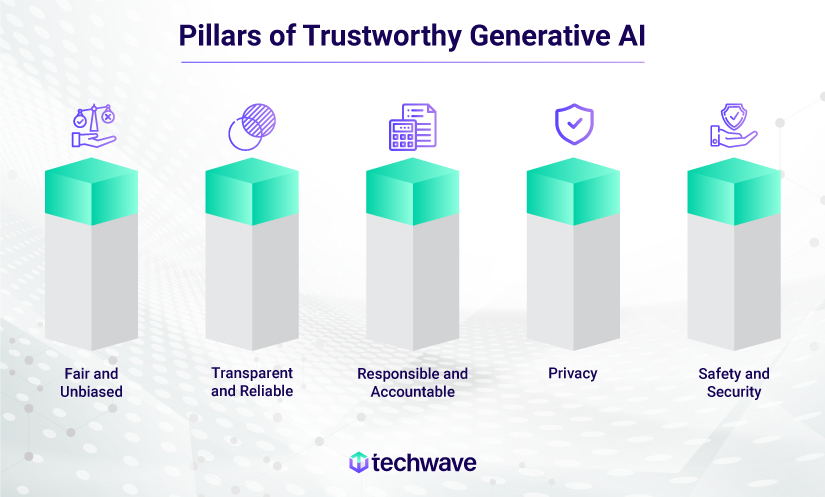

Trustworthy genAI refers to an AI system designed, built, and put into operation with a human-centered approach and in partnership with various stakeholders. This approach ensures that AI respects human values, upholds rights, and follows ethical standards while providing reliable outputs.

Trustworthy AI encompasses three key components that AI teams must uphold across the system’s lifespan:

- Legal compliance: Adhere to all relevant laws and regulations.

- Ethical standards: Ensure alignment with ethical principles and values.

- Robustness: Be technically and socially robust, since even well-intentioned systems can cause unintended harm.

Why Embracing Trustworthy AI Matters

Companies that adopt trustworthy AI are not only positioned for commercial success but also tend to fulfill the human inclination to contribute positively, respond to external influences, meet market expectations, and anticipate legal demands.

Ensuring fairness and instilling trust in the AI system necessitates human-centered aspects such as explainability, interpretability, reliability, and accountability. The responsibility to make AI more responsible and explainable lies with humans.

Considerations for Building Responsible and Trustworthy Generative AI

Responsible development of genAI is a collective duty involving stakeholders across the board. Each individual holds a crucial part in guaranteeing ethical AI use. The following are essential principles to ensure the ‘trust’ and ‘worth’ of generative AI.

Fair and Unbiased

Trustworthy AI necessitates a fair and consistent decision-making process devoid of discriminatory biases – visible or latent. Visible biases are easily observed and quantified and typically relate to specific features within the dataset. For instance, a financial institution employing AI to assess mortgage applications might uncover biases based on race, gender, or age within its algorithm, leading to unfair treatment.

On the other hand, hidden biases are more challenging to detect, quantify, or measure. For instance, a computer-vision model might exhibit bias towards background textures when identifying objects in the foreground. Similarly, a face detection model might demonstrate bias concerning a person’s gender or skin color.

Microsoft, Face++, and IBM initially provided face recognition services with higher accuracy for identifying gender in light-skinned men but lower accuracy for darker-skinned women. This occurred due to imbalanced training data, favoring images of males and individuals with lighter skin. Subsequent improvements were made to address these disparities.
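Gaps like these tend to stay hidden when a model is judged by a single overall accuracy figure. As a rough sketch, the snippet below computes accuracy separately for each subgroup and reports the largest gap. The helper names and the tiny evaluation set are invented for illustration; they are not the data or method behind the findings described above.

```python
def accuracy_by_group(examples):
    """Compute classification accuracy separately for each subgroup.

    `examples` is an iterable of (subgroup, true_label, predicted_label).
    """
    correct = {}
    total = {}
    for subgroup, truth, predicted in examples:
        total[subgroup] = total.get(subgroup, 0) + 1
        correct[subgroup] = correct.get(subgroup, 0) + int(truth == predicted)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served subgroup."""
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation set: (subgroup, true label, model prediction)
evaluation = [
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "male"),
    ("darker-skinned women", "female", "female"),
    ("darker-skinned women", "female", "male"),
]

per_group = accuracy_by_group(evaluation)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

In this toy sample the overall accuracy is 75%, which looks acceptable until the per-group breakdown shows one subgroup is served far worse than the other.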

Transparent and Reliable

Transparency reinforces trust, and disclosure is the best way to promote transparency. This means ethical AI solutions must use algorithms that are not hidden or opaque.

Consider a healthcare firm using AI for brain scan analysis and treatment recommendations; trustworthy AI ensures consistent and reliable outcomes, vital when lives are at stake!

Responsibility and Accountability

Trustworthy AI systems require clear policies defining responsibility and accountability for their outcomes. Blaming technology alone for errors or poor decisions is insufficient, both for the affected individuals and for regulatory bodies; accountability rests with all parties involved. This concern will likely heighten with the rapid proliferation of AI in crucial applications like disease diagnosis, wealth management, and autonomous driving.

Consider a scenario where a driverless vehicle is involved in a collision. Determining responsibility for the damage becomes crucial—Is it the driver, the vehicle owner, the manufacturer, the AI programmers, or the CEO? Clarifying these responsibilities is imperative in the realm of AI’s expanding applications.

Privacy

Privacy is a significant concern across data systems, but it becomes especially crucial in AI due to its reliance on highly detailed and personal data for generating sophisticated insights. Trustworthy AI adheres strictly to data regulations and employs data solely for specified and agreed-upon purposes.

For AI to be trustworthy, it must safeguard privacy at all stages, not just of the raw data but also of the insights derived from it. Data ownership belongs to its human creators, and AI should prioritize maintaining the utmost integrity in preserving privacy.

The realm of AI privacy frequently transcends a company’s internal operations. For example, recent headlines highlight the privacy issues surrounding the audio data AI assistants collect. These controversies revolve around concerns regarding vendors’ and partners’ access to the data and debates over whether the data should be shared with law enforcement agencies.

Safety and Security

Trustworthy AI advocates rigorous testing for scalability and safety conducted in complex simulations that mirror real-life environments.

AI software needs safeguarding from adversarial exploits such as hacking. Attacks can target data, models, or infrastructure, altering system behavior or causing shutdown. Malicious intent or unexpected events can also corrupt systems and data. For instance, if a financial system powered by AI gets hacked, it could lead to data breaches, financial loss, and reputational damage.

A reliable generative AI framework is a coveted dream for ethicists. The considerations above help turn it into reality by simplifying the creation of trustworthy genAI.

In addition, effective data governance is pivotal in establishing trustworthy Artificial Intelligence (AI) systems. It is the bedrock for successful AI initiatives, ensuring the data’s reliability, consistency, and accuracy for training and validating AI models.

As genAI marks a significant advancement in AI technology, it greatly enhances AI’s utility for the financial and payment industry, which is gradually adopting it. In the business payment sphere, AI expedites invoice processing, minimizes errors, and notably improves the payment procedure, establishing a new standard for efficiency.

With the right partner to ensure a responsible AI system, genAI becomes a strong contender in the realm of digital transformation. Techwave is at the forefront of advancing payments through Generative AI services, elevating transactions to an unprecedented level.

To learn more about our services, feel free to contact us.